The Social Media Election

In the 2016 US Presidential election, Facebook has clearly become a major platform for political discussion. It allows users to voice their own opinions and share information from non-mainstream news sources that might otherwise go unnoticed. Users who already visit the site daily are also increasingly relying on it as a news source. A 2015 Pew Research Center study found that 61% of online millennials got political news on Facebook in a given week, making it their most common source of political news, compared with 51% of Generation Xers and 39% of Baby Boomers. Yet obtaining political news from social media raises questions of legitimacy, and users should learn to take the news they get from Facebook with a grain of salt.

One glaring source of mistrust is users’ own posts on Facebook. Every Facebook user can write a post expressing their opinions. However, there is no way to verify the accuracy of such a post, and problems arise when users trust its assertions without further research. Counterintuitively, a large number of views or shares does not equate to legitimacy. The same applies to videos and articles hosted on other websites: a well-written article or a well-filmed video is not necessarily accurate. During an election, these unreliable sources may circulate misleading or untrue information about the candidates, yet they cannot be removed from social media platforms for that reason alone. With so much material circulating through Facebook every day, it is unrealistic to expect users to manually sort the legitimate from the illegitimate. This places the responsibility on Facebook. Google News’s fact-check feature is a good source of inspiration: in early October, Google introduced it to help users distinguish reliable articles from unreliable ones. While monitoring personal posts would carry greater risks for Facebook, the company could at least address viral videos and articles.

Yet unreliable sources alone are not to blame for the distorted information people encounter. Some users already know that Facebook gathers their basic information and tracks their ad preferences, but most don’t know the extent of this collection. Facebook categorizes users as liberal, conservative, or moderate, and adjusts their timelines accordingly. (The Wall Street Journal has published an interactive feature on this.) Facebook infers a user’s leaning from a number of factors, such as the political leaning of the pages they have liked, the types of articles they read, and their location, age, and political activity on Facebook. While Facebook is presumably prioritizing user-friendliness and commercial interests over political ones, this filtering raises concerns about transparency. Without knowing that their news feed is filtered into a “liberal” or “conservative” stream, a user may genuinely believe that what they are seeing reflects an average consensus. Users may also be underexposed to articles of the opposite leaning. Although some users may prefer to see only personally relevant information, Facebook shouldn’t assume this is true of everyone. Facebook should notify users of their classification and let them choose which type of news feed they prefer.

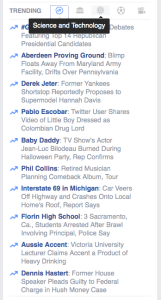

Doubts about Facebook’s supposedly unbiased stance have surfaced as well. Earlier this year, former Facebook “news curators” claimed that they had been allowed to manipulate the “trending topics” section. Facebook is a news aggregator, not a first-hand news source: it decides which stories from other outlets to surface, which gives it almost unchecked authority to reconfigure trending topics according to its own interests or users’ personal preferences. Most Facebook users assume that the stories in the trending topics section emerge naturally, based on what is getting the most traffic. In reality, the section is generated by both algorithms and news curators, and then customized to each user’s preferences. One former curator said that conservative news was frequently suppressed: “I’d come on shift and I’d discover that CPAC or Mitt Romney or Glenn Beck or other popular conservative topics wouldn’t be trending.” Another claimed, “It was absolutely bias. We were doing it subjectively. It just depends on who the curator is and what time of day it is.” In response, Facebook denied the claims and reasserted its commitment to neutrality. It also changed the trending topics descriptions to show the number of people talking about each topic, instead of a short summary that could reflect the interpretation of the curator who wrote it. Whether or not Facebook is truly neutral, it has no legal obligation to remain neutral. As long as trending topics are curated by people rather than fully automated, it is reasonable to expect at least a slight bias.

This is not to argue that all political discussion on Facebook is harmful, nor that Facebook alone is to blame for the state of media governance. Seeing varied opinions raises voters’ awareness of issues and helps them form their own views. Having politically active Facebook friends may also increase a voter’s likelihood of voting. In fact, a study of the 2010 US midterm election found that Facebook’s “I Voted” button may have increased voter turnout by 340,000 people. As Facebook continues to grow as a major source of news, it must acknowledge its social responsibility and strive to provide users with the most reliable, undistorted news possible.