The Flaw in Global University Rankings

Choosing a university is one of the biggest and most life-changing decisions a student will make. Global university rankings offer students a way to evaluate and compare schools based on academic quality. Accordingly, 23.5 per cent of students believe that rank is the single most important factor when choosing a university.

Rankings are even more crucial for international students, as local counsellors, advisors, and relatives are often unable to provide actionable advice. Universities, for their part, value these students as considerable sources of income and increased diversity. Online information, such as international rankings, is therefore a precious, and in some cases the only, tool for international students when choosing a university. Given the existing difficulties of studying abroad, like language barriers or visa issues, this information can even be the deciding factor in their choice. This is especially relevant at McGill University, where 27 per cent of students are international, coming from more than 150 countries.

Because of their many flaws, international rankings should not be taken so seriously by students. A closer look at how they work shows how arbitrary they really are, and how they perpetuate the increasing competitiveness and commercialisation of the higher education industry.

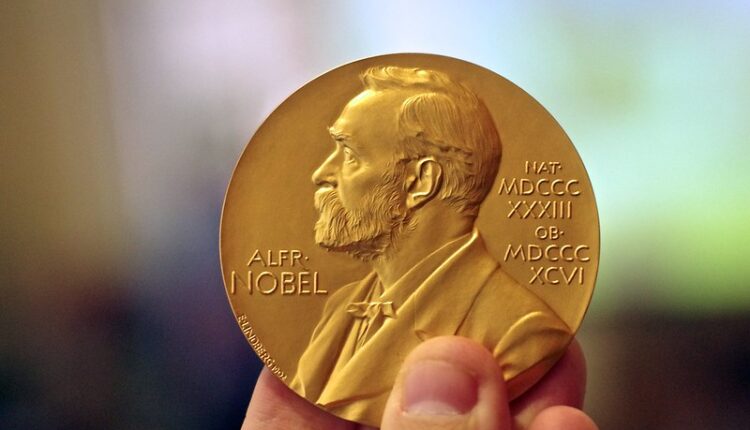

The three most important and influential global rankings are the Quacquarelli Symonds World University Rankings (QS), the Academic Ranking of World Universities (ARWU) or Shanghai Ranking, and the Times Higher Education World University Rankings (THE). These ranking systems evaluate and compare academic institutions from around the world, using similar indicators like surveys, ratios, publications, and prizes. The rankings are published annually and made freely accessible to students, universities, and employers. For example, McGill University was ranked 40th worldwide in the 2021 THE ranking, published on Sept. 2, 2020.

Understanding University Rankings

Global university ranking methodologies are surprisingly homogeneous. Each considers approximately five simple metrics that are assigned different weights based on their respective importance. Essentially, these dimensions of quality are education, teaching, research, citations, and international outlook. Education is often given the biggest weight, as much as 40 per cent in the case of QS. Some differences do exist between rankings, like the importance given to the research dimension. THE goes so far as to allocate a 60 per cent weight to the research output of a university, further subdivided into reputation, volume, income, and influence. Nevertheless, the actual variations between rankings are not the result of different metrics or weights, but of the way those metrics are calculated.
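The weighting described above amounts to a weighted average. The following is a minimal sketch of that arithmetic only: the function name, the indicator scores, and the weights are all hypothetical and do not reproduce the actual formula of QS, ARWU, or THE.

```python
def composite_score(scores, weights):
    """Return the weighted average of indicator scores (each on a 0-100 scale)."""
    if set(scores) != set(weights):
        raise ValueError("scores and weights must cover the same indicators")
    total_weight = sum(weights.values())
    return sum(scores[name] * weights[name] for name in weights) / total_weight


# Hypothetical indicator scores for one university (not real data).
scores = {
    "education": 70.0,
    "teaching": 60.0,
    "research": 90.0,
    "citations": 80.0,
    "international_outlook": 50.0,
}

# Hypothetical weights in per cent; not any ranking's published scheme.
weights = {
    "education": 40,
    "teaching": 20,
    "research": 20,
    "citations": 10,
    "international_outlook": 10,
}

overall = composite_score(scores, weights)
print(f"Overall score: {overall:.1f}")
```

The weights only combine the indicator scores. How each score is measured in the first place is a separate question, and it is the one the rest of this section examines.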

Surveys, ratios, publications, and prizes are the indicators QS, ARWU, and THE use to score a university on each metric, such as research. Each ranking decides which indicator is most suitable for appraising an institution’s quality. For example, to assess the quality of teaching, THE uses both surveys and ratios, while ARWU counts the number of Nobel Prizes and Fields Medals in a university’s alumni network. Still, the use of these indicators raises several problems tied to the nature of such indexes.

First, “reputation surveys,” which account for 33 per cent of THE’s score and half of QS’s, are unreliable indicators of an institution’s quality. This is in part because the past affiliations, nationalities, and current specializations of the surveys’ participants are not taken into account, which biases the final rankings. Furthermore, QS records and uses its respondents’ answers for up to five years, allowing the opinions of inactive or retired professionals to count. This is one reason QS managed to build the “world’s largest survey of academic opinion.”

Moreover, reputation surveys are in fact a measure of a university’s research output. By increasing its number of publications and investing in research, a university ensures that as many professionals as possible will know about it. Reputation is therefore quite arbitrary, and should not overshadow other factors, such as location, social life, or cost, when choosing a university.

Second, ratios are not indicators of teaching excellence. Rankings like QS use “data scraping,” meaning they source the numbers used for ratios from various university websites. This has caused a number of problems when calculating faculty/student ratios, which account for 20 per cent of QS’s ranking. For example, it has been found that QS mistakenly counted gardeners and cleaners among the teaching faculty of a particular university. In addition, ratios are also a measure of research activity: no distinction is made between teachers and researchers, so a university with a high research output ends up with a favourable ratio.

Third, prizes and publications are not indicators of quality education. These criteria are again linked to research, and a good researcher is not necessarily a good teacher. Furthermore, ARWU only takes into account Nobel Prizes and Fields Medals, two Western-oriented honours, which undervalues research done elsewhere. Attributing 30 per cent to these prizes only promotes Western academic culture, to the detriment of universities in other regions, which are already underrepresented in the education industry. Thus, international rankings give visibility to those who need it the least: the North American universities that already dominate the higher education system.

On close inspection, 100 per cent of ARWU’s indicators are actually research-related, favouring universities with a high research output. This focus on research forces universities to adapt if they want to remain competitive. For example, the Paris-Saclay cluster jumped to 14th position in ARWU’s latest ranking by grouping together 14 different institutions and 280 laboratories. This success is possible in part because the Shanghai ranking makes no adjustments for size.
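To see why the absence of a size adjustment matters, consider a minimal sketch with invented numbers. The three institutions, their paper counts, and their staff counts are hypothetical, and the raw paper count stands in for any indicator based on totals rather than per-capita figures; it is not ARWU’s actual formula.

```python
def merge(institutions):
    """Combine institutions into one cluster by summing papers and staff."""
    papers = sum(inst["papers"] for inst in institutions)
    staff = sum(inst["staff"] for inst in institutions)
    return {"papers": papers, "staff": staff}


def papers_per_staff(inst):
    """Size-adjusted output: papers per staff member."""
    return inst["papers"] / inst["staff"]


# Hypothetical institutions (not real data), each producing 3 papers per staff member.
institutions = [
    {"papers": 1200, "staff": 400},
    {"papers": 900, "staff": 300},
    {"papers": 600, "staff": 200},
]

cluster = merge(institutions)
largest_single = max(inst["papers"] for inst in institutions)

print("Largest single institution, raw papers:", largest_single)
print("Merged cluster, raw papers:", cluster["papers"])
print("Merged cluster, papers per staff:", papers_per_staff(cluster))
```

In this sketch the merged cluster more than doubles the raw total of its largest member, while output per staff member is exactly what it was before the merger. An indicator built on raw totals rewards the merger; a size-adjusted one would not.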

Beyond helping students choose the right school, a look at ranking methodologies also helps explain McGill’s rank, which ranges from 78th in ARWU to 31st in QS. McGill first benefits from its size and its age. As it closes in on its bicentennial, McGill enjoys a large network of 275 000 alumni, thanks to its 40 000 students. Eight Nobel Prize winners can be found in this network, such as John O’Keefe, PhD ’67, who received the 2014 Nobel Prize in Physiology or Medicine.

McGill University also fully understands the importance of research activity for university rankings. With more than 1700 tenured or tenure-track faculty members, McGill is committed to “research excellence” and aims to be “the premier research-intensive university in Canada.” This commitment certainly helps McGill maintain and improve its ranking over the years. Ultimately, university rankings, though helpful for contextualization, focus on factors that are not necessarily relevant to students. Understanding this is the first step towards re-examining how students should approach such a critical choice.

The featured image, McGill University by Edward Bilodeau, is licensed for use under CC BY-NC-ND 2.0. No changes were made.

Edited by Emma Frattasio