PART II: Neo-Luddites and the Era of AI

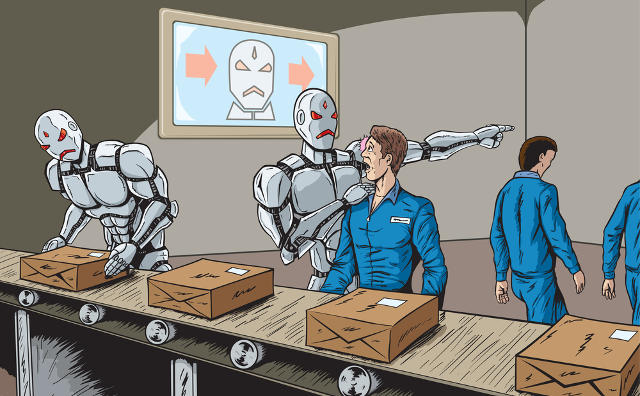

“Robot job takeover unemployment” by Qniksefat is licensed under CC-BY-SA-4.0.


This article is part of an MIR series on the ethics of AI.

In 2022, the Artificial Intelligence (AI) market was valued at $119.78 billion, and it is expected to grow at an astounding compound annual growth rate (CAGR) of 38.1 per cent through 2030. The growth of the AI industry undoubtedly opens exciting new frontiers, but it could also prevent much of society from reaping its benefits. New AI technologies are often implemented haphazardly, without adequate ethical and legal safeguards, which creates inequalities in people's ability to adapt swiftly to technological change. As technology drives entire categories of jobs toward extinction, it is worth asking whether AI companies truly care about the livelihoods of the general population. Are these technologies doing more harm than good for the average citizen?
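To put the 38.1 per cent figure in perspective, the standard compound-growth formula gives a rough sense of scale. This is only a back-of-the-envelope illustration, assuming the rate compounds annually on the 2022 base for eight years (2022 to 2030); it is not a number taken from the forecast itself.

$$
V_{2030} \approx V_{2022}\,(1 + r)^{8} = 119.78 \times 1.381^{8} \approx 119.78 \times 13.23 \approx 1{,}585 \text{ billion dollars}
$$

In other words, sustained growth at that rate would make the market roughly thirteen times larger by 2030, on the order of $1.6 trillion.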

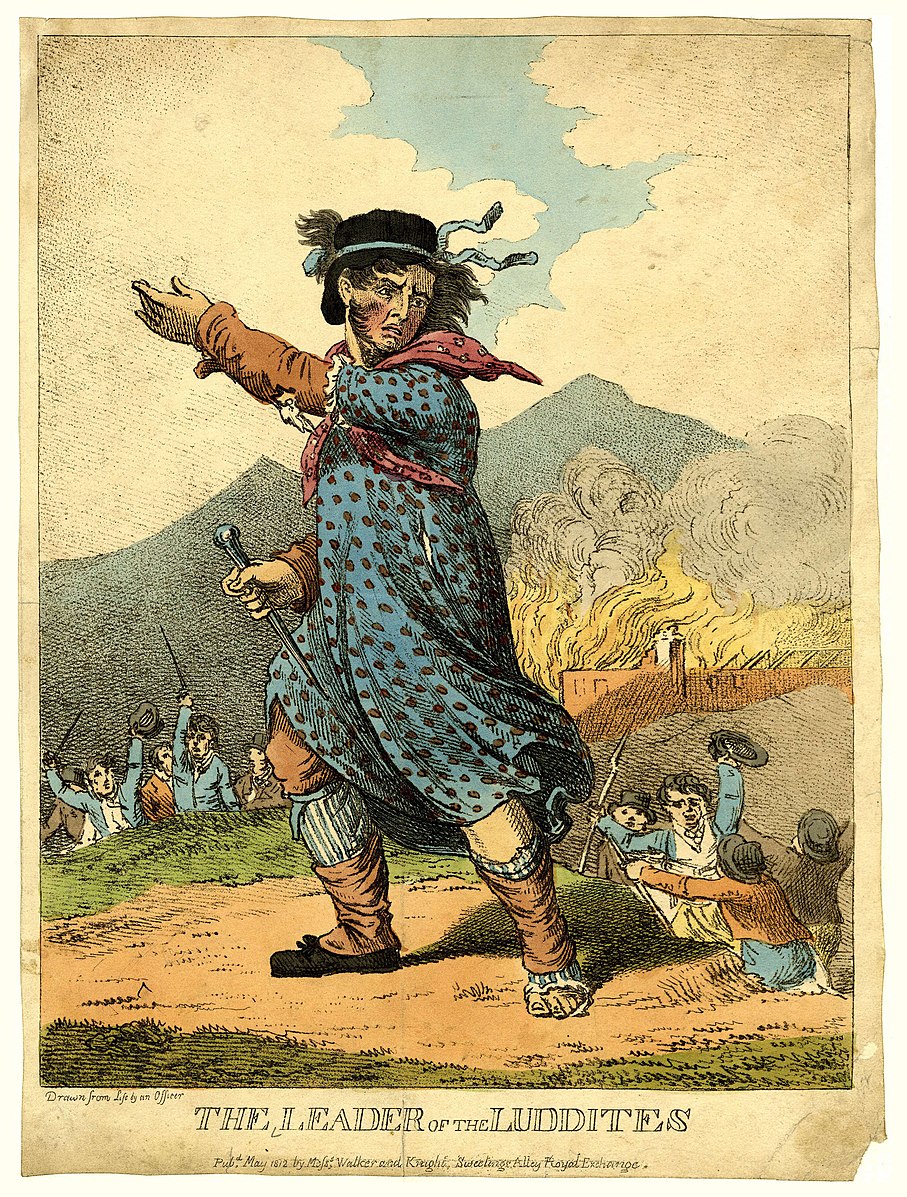

To find answers, we can travel back in time to the Industrial Revolution, a key starting point for understanding the dynamics between technology and people. Many of the grievances against technology voiced in society today can be traced back to the origins of the Luddite movement, a working-class initiative that sought to raise awareness of the inhumane deployment of technology in the workplace. A group of 19th-century English weavers and textile workers who opposed the growing use of mechanized looms and knitting frames, the Luddites were famously reviled for smashing machines as a dramatic form of protest. What most people do not know, however, is that at their core the Luddites championed equitable labor rights: they watched their livelihoods being destroyed by dangerous textile mills that subjected workers to unsafe conditions while severely underpaying them. They were not toppled by the inevitable forces of technological progress, but rather by the targeted retaliation of powerful interests that sought to silence their complaints. By making machine-smashing an offense punishable by hanging, the English government crushed the movement, and it sat idly by as factory bosses gunned protesters down en masse. Despite the heavy hand of the government, the movement did not die there; it transformed into neo-Luddism, which continues to attract a growing number of adherents today. Neo-Luddites draw on the original philosophy of questioning the ethics of contemporary technological advancement, a line of thinking that may prove far more pertinent to the average technology user than it is given credit for.

The AI Debate

Just as industrial factory owners failed to consider their laborers' best interests in the 19th century, contemporary AI companies are likewise complicit in exploiting citizens, in this case through their capacity to take over human jobs and crush livelihoods. OpenAI, the research company behind platforms like ChatGPT and Dall-E, is facing much of the backlash. ChatGPT, which garnered a record-breaking 100 million users just two months after its launch, is already rapidly changing the job landscape and leaving many behind. As American economist Harry J. Holzer warns, "automation shifts compensation from workers to business owners who gain higher profits with less need for labor". According to Holzer, high-skilled workers who can perform tasks beyond the abilities of machines by "complementing" the new automation, whether through additional education or training, often enjoy rising compensation. Workers whose tasks are largely "substituted" by machines, on the other hand, are left worse off. In practice, workers with at least some postsecondary credentials will fare better because of their adaptability, while those without higher education may lose their jobs.

AI art platforms like Dall-E and Stable Diffusion also pose problems for smaller-scale artists who are trying to make a name for themselves, since these platforms generate digital art from an amalgamation of pre-existing artwork found on the internet. In this sense, bits and pieces of smaller artists' work are stolen and repurposed without their consent, often reproducing their signature styles and motifs; the artists lose the creative value of their work, which threatens to drive them into obsolescence. To fight back, artists Karla Ortiz, Sarah Andersen and Kelly McKernan have filed a class-action lawsuit in California against Stability AI, Midjourney and the art website DeviantArt, alleging that the unauthorized use of artists' work to train Stable Diffusion is a breach of copyright. As Matthew Butterick, one member of the legal team spearheading the lawsuit, has stated, companies like Stability AI have conveniently "skipped the expensive part of complying with copyright and compensating artists, instead helping themselves to millions of copyrighted works for free."

As AI continues to grow and expand into different fields, we must stop and ask how these technologies are affecting the jobs and livelihoods of the average person. The reality is that AI is not helping people put food on the table; it serves those who are already well-educated and wealthy. AI will likely widen income inequality, placing more control in the hands of employers who may choose to displace laborers or cut their hours. If such changes occur, who is to say trickle-down policies will work in laborers' best interest?

One indication of this change is already visible in the actions of Facebook's parent company, Meta. In November 2022, it cut 11,000 jobs, and it is now setting out to slash 10,000 more in order to funnel greater investment into advancing AI in its products. The race among Big Tech companies toward AI dominance is quite literally destroying the livelihoods of thousands of employees, who will need to seek work elsewhere.

Mobilizing for Action: Proactive Ethics

This intensified competition, led by tech giants like Microsoft and Google, drives the rapid rollout of new generative AI tools without explicit, transparent federal AI guidance. Companies that should not be trusted are instead largely left to their own devices, setting and following their own guidelines. The risk of producing false information is high when AI products are released before they are ready and reliable. Chatbots often state false information with confidence, a phenomenon the industry dubs "hallucinating", and users frequently need expertise in the relevant field to judge whether a chatbot's answer is correct. By this token, average users are highly susceptible to misinformation. The dissemination of biased or false content can have grave geopolitical repercussions for elections and the legitimacy of democracy.

The European Union has been trying and failing for years to hold AI companies accountable through regulations like the Artificial Intelligence Act, proposed in 2021 to counter the harmful effects of AI. Because platforms like ChatGPT can be prompted toward both beneficial and harmful ends, regulating their use is nearly impossible. There are so many unknowns in this field, which is precisely why it is all the more important to question both the ethics of these platforms and whether they need to exist at all. Companies must look inward to determine whether their platforms are actually needed, and if they are not, the burden of responsibility and accountability must be placed on them. While the industry operates on a "move fast and break things" mentality, more research evaluating the drawbacks of projects before they are implemented is necessary.


Edited by Sara Parker.