Facebook: Russian Bots and a Crisis of Identity

https://flic.kr/p/2vGjwH

Facing recent charges of spreading "fake news" and facilitating foreign interference, Facebook may be forced to rebrand. Although it does not charge the site explicitly, a recent indictment from the Mueller investigation is the first official account of Facebook's significance in Russia's interference campaign, and that implication will need to be addressed soon.

The high-profile investigation into Russian interference in the 2016 election, led by Special Counsel Robert S. Mueller, charged 13 Russians and three companies in its latest round of indictments this February. The indictment details how these Russians primarily used Facebook to spread divisive content while masquerading as Americans. Beyond Russia-sponsored political ads focused on divisive social issues such as race, gun control, and immigration, these Russian "trolls" also organized social network groups and tense political rallies. The aim of these accounts is clear: divide America.

Russian interference in the U.S. political scene is old news. Unauthorized foreign intrusion into government, whether a hack of the DNC or an infiltration of the U.S. Senate's computer network, can be addressed almost without debate by tightening cybersecurity and keeping hackers out. When interference moves into the social sphere through private-sector social networks, however, questions of free speech and government intrusion must be tackled first. For sites that have built their name on the free flow of information, such as Facebook, this political interference becomes a crisis of identity. Having marketed itself as a facilitator of positive Internet connection and democracy, Facebook now has to confront the fact that misuse is also a consequence of its platform.

How Did It Happen?

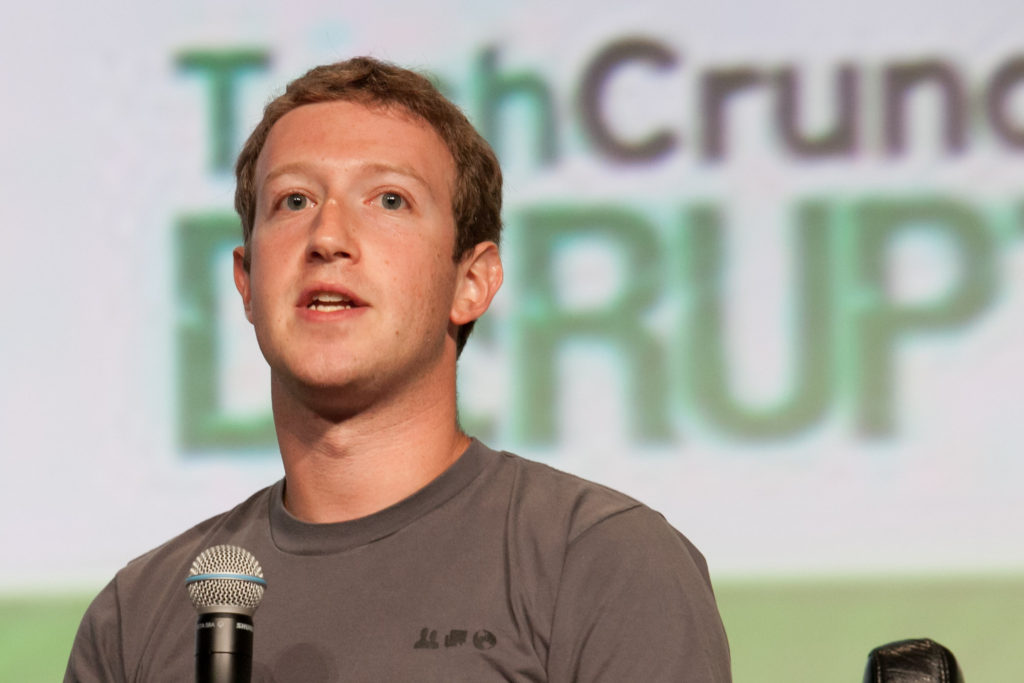

Research by Kevin Roose, a technology writer for the New York Times, found that the Russian bot problem stemmed from changes Facebook made to its News Feed in 2013. To compete with Twitter as a source of news, CEO Mark Zuckerberg planned to expand the News Feed to include published news and political ads, moving the site away from its image as merely a "family and friends" network. This new "digital newspaper" would be tailored to each individual user, delivering highlights based on what that user had previously viewed or liked.

Roose notes that Facebook quickly faced backlash after these changes were implemented, with some critics complaining of liberal bias in their feeds. To correct this perceived problem and shield itself from politics, Facebook overcorrected: it largely stopped policing political or controversial content and removed human judgment from curation, leaving it to the algorithm. This hands-off approach presented a perfect opening for the Russian Internet Research Agency, a shadowy, Kremlin-linked company that began its U.S.-focused operations in 2014, to start targeting foreign politics. Using millions of online troll accounts, the Agency used Facebook and other platforms to post divisive political advertisements, push controversial issues, organize group rallies, and pick public fights.

Taking Responsibility

The Internet is often seen as effecting positive political change through the spread of information. From facilitating the Arab Spring to promoting robust elections, social media sites seemed to be tools for uniting ideas and challenging corrupt systems. Sites like Facebook readily embraced this image as promoters of democracy.

However, it seems logical that if the Internet could foster positive change, it could have a negative influence too. When Facebook was hit with charges of disseminating "fake news" and divisive content after the 2016 election, Zuckerberg immediately distanced the company from the election's outcome, scoffing at the accusation that Facebook had influenced the results and calling it "a pretty crazy idea." He argued for voter agency, saying that there is "a profound lack of empathy in asserting that the only reason someone could have voted the way they did is because they saw fake news."

In light of the Mueller investigation's findings, Zuckerberg appears to have been badly wrong. In September 2017, Facebook disclosed that it had identified "$100,000 worth of divisive ads on hot-button issues" purchased by the Internet Research Agency. A month later, the company announced that it would cooperate fully with the investigation and hand 3,000 Russian-linked political advertisements over to Congress. Further investigations found these troll accounts active around the world, entangled in other divisive political issues such as Brexit and the 2017 French presidential election. As last year progressed, it became clear to Facebook, and to the public, that the site's role in politics was larger than Zuckerberg had anticipated.

The recent indictment in the U.S. makes these implications official. While the charges do not accuse Facebook of explicit wrongdoing, the indictment clearly states that Russian companies used the site to "subvert the 2016 election and support Donald Trump's presidential campaign." Its News Feed format and its position as one of the most frequently used social media platforms mean that the site remains a key target for foreign influence campaigns, and Zuckerberg and his team can no longer leave this entanglement unacknowledged.

Social Media and Democracy: What Can Be Done?

Facebook's next step must be to take preventive measures. In late October last year, Facebook executives publicly acknowledged the company's role in the Russian interference scheme at a Congressional hearing. Despite taking 11 months to admit to the problem, the executives presented no official proposal to address it. Moreover, Facebook's VP for ads, Rob Goldman, responded to the February indictment without any plan of action, keeping the company at a clear distance from accusations of electoral interference.

How, then, might Facebook resolve this issue? To Zuckerberg's credit, in September he announced a nine-step plan to reduce political interference on his site. This plan, however, seems both vague and lacking in direction. Steps one and two essentially state that Facebook will continue to cooperate with the Russia investigation, a remarkable statement of the obvious. Steps three and four briefly outline how Facebook intends to "make political advertising more transparent." The later steps use even vaguer language, such as a "team working on election integrity," "increase[d] sharing of threat information," and "investment in security." While these sound like steps in the right direction, they lay out no concrete plans for how Facebook will handle political activity on its platform. What does an "investment in security" mean? How will the lives of everyday users be affected by Facebook's changes, if they are, in fact, changes?

The only concrete change from Facebook since the September disclosures has been a test, running since October, of a new version of the News Feed in six countries. In response to the fake news and Russian interference allegations, Zuckerberg piloted what he called an "Explore" page format, designed to move disruptive or potentially divisive news content to a separate tab, away from personal content. However, the company soon faced backlash from local news outlets that relied on Facebook as a main source of traffic, which dropped during the testing period. The approach seemed simply to reduce traffic to all news sites regardless of credibility, which could leave voters less informed in the future.

Facebook's vague and poorly targeted plan to "strengthen the democratic process" and drive change also raises another, arguably more pertinent, question: Is engaging in the democratic process Facebook's responsibility? If so, what can actually be done, given its constraints?

While Zuckerberg was too quick to distance Facebook from its responsibility in 2016, he did make a fair point about voter agency. Ultimately, voters are responsible for making up their own minds about who should receive their vote. Companies, groups, and individuals have been trying to influence that choice for centuries, and through Supreme Court precedents such as McCutcheon v. FEC and Citizens United v. FEC, the U.S. political system has consistently protected the right to political speech, expression, and support.

While foreign interference is clearly harmful, outlawing all foreign attempts to influence U.S. voters online would be a slippery slope. A targeted Russian attack on a specific election is certainly prosecutable, but what about the many legitimate users around the world posting videos or updates arguing that Trump was unfit for office? Although those posts are not directed by a government, might they not have a similar effect?

Furthermore, giving a company like Facebook license to define what is "good" content and censor the "bad" could silence millions of legitimate political voices. The main concern with restrictions of this kind is that, if poorly directed, they could undermine the free-speech protections the First Amendment is meant to guarantee. The only approach that would respect free-speech norms while also tackling Russian interference would be a targeted mechanism for removing all Russian bots, trolls, and other accounts that do not belong to real people. Based on the vague strategies the company has proposed and tested so far, the outlook is not promising.

Edited by Alec Regino