Online Platforms and the First Amendment Problem

Although online platforms have existed for decades, their potentially counterproductive influence on electoral integrity has given rise to an impasse. Tech giants like Facebook, Twitter, and Google are seldom on the same page as state bodies when it comes to managing the power of technology services, promoting freedom of speech, and protecting First Amendment rights. Actors on each side have actively debated whether global technology firms threaten free expression, and how these firms can be regulated to preserve the rights of Americans (and of international users). Moreover, the two parties represent differing goals and constituents. Whereas legislative bodies operate within government structures and are beholden to the electorate, tech giants must maintain a business model that drives profits for shareholders. As such, both governmental intervention and civil action are required to overcome the challenges that powerful tech firms pose to society.

Two sides of the story

Tech executives argue that their role extends beyond their services, as they empower social mediators across all segments of contemporary society. Anyone with the privilege of internet access can speak to a global audience with greater ease than ever before. Under current legislation, companies can freely interpret the bounds of “good faith” content moderation, meaning they claim to be maintaining a healthy level of oversight on harmful content like hate speech, terrorist propaganda, and other forms of “objectionable” expression.

Tech companies’ efforts to justify their intentions would not be needed in the absence of doubters. For example, in 2018, YouTube evaded a lawsuit in which Prager University accused the company of censoring conservative content and having a “political identity and viewpoint.” Ultimately, the case was dismissed because YouTube does not qualify as a “state actor.” In other words, private entities have the same First Amendment rights as individuals, allowing them to make their own decisions about the content on their platforms. This case illustrates that online service providers do not have to be neutral or fact-check. Even if they wanted to be neutral, doing so would be impossible given the subjective nature of “objectionable” content, whose definition varies with each country’s free speech laws.

Key challenges and unanswered questions

Widespread concern regarding the political and social consequences of tech giants’ unfettered freedom has prompted legal investigations led by the Department of Justice, the Federal Trade Commission, and state-level prosecutors. However, many challenges stand in legislators’ way when deciding on the best legal course of action to govern online service providers.

For one, lawmakers usually struggle to penetrate the digital bubble of software and algorithms that are incomprehensible to most outsiders. Senators often display frustration when trying to get a straight answer from the executives being questioned in congressional hearings. Being vague may allow these executives to protect their private intellectual property during public hearings, but it also means lawmakers are unlikely to know enough about these companies’ behind-the-scenes content regulation procedures. Capitol Hill recently expressed concern over the spread of misinformation about COVID-19 and climate change on Facebook, subsequently demanding more information about how the platform handles fact-checking and whether its size impedes this process. However, previous attempts suggest that many lawmakers are technologically inept.

Evelyn Douek’s essay, “The Rise of Content Cartels,” poses a question that encapsulates the two main options considered by government bodies in response to internet giants’ power over free speech standards. Douek asks: “Should platforms work together to ensure that the online ecosystem as a whole realizes these standards, or would society benefit more if it is every platform for itself?”

Douek’s “every platform for itself” option implies enacting stricter antitrust enforcement on these technology companies and promoting healthy levels of competition. For instance, Facebook already differs from other social media platforms, like Twitter, in its less aggressive approach to policing inflammatory comments and fact-checking. By increasing the number of players in the market, the industry may align itself with the type of moderation that is most palatable to consumers. However, this approach has numerous flaws. Since no “ideal” moderation method has surfaced as of 2020, it is uncertain whether such an alignment is possible. Also, if online platforms have not broken any laws, then arbitrary antitrust enforcement can be seen as an abuse of power by the government, something the First Amendment is intended to protect private entities from.

The other option entails shared standards, which are arguably harder to put in place, as they require amending or rewriting current communication and media laws. Mark Zuckerberg expressed his feeling of being caught in the polarity of the American political system, saying: “The Trump administration has said we have censored too much content and Democrats and civil rights groups are saying that we aren’t taking down enough.” The challenge lies in the ability of Facebook and the government to find a middle ground between protecting the First Amendment rights of citizens and policing disinformation. Still, whoever defines this middle ground may be subject to ideological bias themselves.

Mark Zuckerberg should listen to his employees and explain what the company will do if Donald Trump uses its platform to try to undermine the results of the presidential election. https://t.co/QfUAmdTL3t

— Elizabeth Warren (@ewarren) August 9, 2020

Senator Elizabeth Warren (D-Mass.) calls on Facebook to explain what it will do if its platform is used to try to undermine the results of the US presidential election. Via Twitter.

What now?

Aside from the government and other actors in positions of power, it is important to remember that members of the public also play an important role by engaging in informed discussions about the influence of technology. More individuals need to consider exercising their freedom responsibly. People often view technology companies’ executives as “genius” figures because of their contributions to human society. However, such successes do not mean these individuals are “messiahs” who hold the answers to all of society’s problems. Thus, the public needs to be critical of their actions and claims, and of how they affect politics, the law, and free speech.

Whether there will soon be an overarching set of regulations to govern the technology sector remains uncertain, as industry players and legislative bodies have been unable to combine their respective expertise to tackle the current issues of content regulation. In the meantime, it is crucial to be critical of the way the internet currently functions, because each individual is inevitably part of the vision that tech giants set for the future.

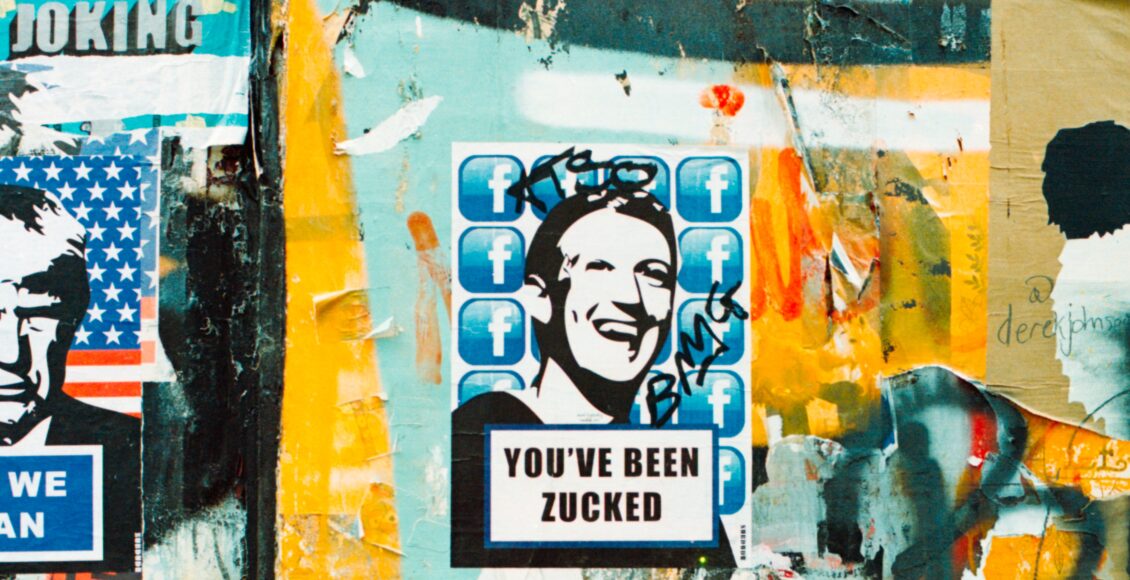

Featured image: “You’ve Been Zucked” by Annie Spratt, via Unsplash.

Edited by Asher Laws